Gazelle's Auto-Math Module

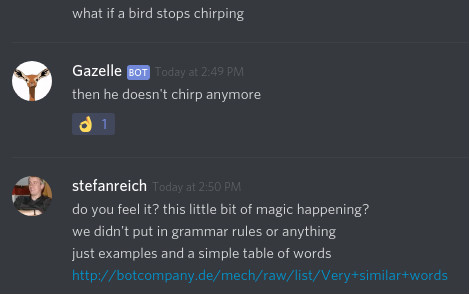

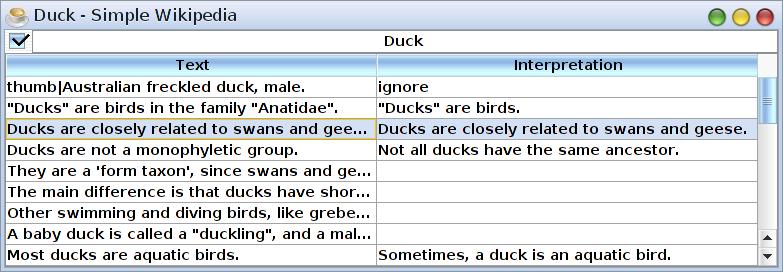

Gazelle Learns

Come join us over at Discord.

New Discord Group

Analyzing Wikipedia

Gazelle growing

A new logic engine

A logic engine is what makes machines talk.

This new one I'm making is really impressive.

The new engine merges syntax and semantics, makes it easy for humans to teach the machine how to think, and doesn't require a full model of the language in question (say, English).

Surely it needs a cool code name, right? How about... uhm... "Gazelle"? "Panther"? "Goldfish"? "Rubber duck"? Something!

Either way: Expect demos soon. Until then, enjoy the chewing gazelle.

Who smokes dope?

Input:

I visited the pope. He smokes dope.

Output:

I visited {the pope} [*1]. He [1] smokes dope.

(The markers [*1] and [1], together with the curly braces, indicate that "he" refers to "the pope".)
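To make the output notation concrete, here is a small Java sketch that reads it back: a brace group followed by `[*n]` defines referent n, and a word followed by `[n]` points to that referent. The class `CorefNotation` and its `parse` method are hypothetical, written only to illustrate the format; this is not the engine's own code.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical reader for the coreference annotation format (not part of the engine).
class CorefNotation {
    // "{the pope} [*1]" defines referent 1.
    private static final Pattern DEFINITION = Pattern.compile("\\{([^}]*)\\}\\s*\\[\\*(\\d+)\\]");
    // "He [1]" is a mention that points to referent 1.
    private static final Pattern MENTION = Pattern.compile("(\\w+)\\s*\\[(\\d+)\\]");

    // Returns a map from each annotated mention to the phrase it refers to.
    static Map<String, String> parse(String annotated) {
        Map<String, String> referents = new LinkedHashMap<>();
        Matcher d = DEFINITION.matcher(annotated);
        while (d.find()) {
            referents.put(d.group(2), d.group(1));
        }
        Map<String, String> links = new LinkedHashMap<>();
        Matcher m = MENTION.matcher(annotated);
        while (m.find()) {
            String target = referents.get(m.group(2));
            if (target != null) {
                links.put(m.group(1), target);
            }
        }
        return links;
    }

    public static void main(String[] args) {
        System.out.println(parse("I visited {the pope} [*1]. He [1] smokes dope."));
        // {He=the pope}
    }
}
```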

Ways to eat pizza

Matt Mahoney recently gave this little example of ways in which language is ambiguous:

I ate pizza with a fork. I ate pizza with pepperoni. I ate pizza with Bob.

I was inspired to make a program to interpret these different meanings of the word "with". I used the 3 lines as training examples, then made some new examples for testing.

My program is a logic engine that takes the following rules:

// Some reasonings that everybody will understand.

// Sorry for the curly braces, we have to help out the parser a tiny bit.

// First, the 3 different cases of what "pizza with..." can mean.

I ate pizza with pepperoni.

=> {I ate pizza} and {the pizza had pepperoni on it}.

I ate pizza with Bob.

=> {I ate pizza} and {Bob was with me}.

I ate pizza with a fork.

=> I used a fork to eat pizza.

// Now some more easy rules.

I used a fork to eat pizza.

=> I used a fork.

I used a fork.

=> A fork is a tool.

The pizza had pepperoni on it.

=> Pepperoni is edible.

Bob was with me.

=> Bob is a person.

// Some VERY basic mathematical logic

$A and $B.

=> $A.

$A and $B.

=> $B.

// Tell the machine what is not plausible

Mom is edible. => fail

Mom is a tool. => fail

anchovis are a tool. => fail

anchovis are a person. => fail

ducks are a tool. => fail

ducks are a person. => fail

my hands are edible. => fail

my hands are a person. => fail

The logic engine performs analogical reasoning using the rules stated above. Note that most of the rules don't mark which parts are variable and which are fixed; this is usually inferred automatically.
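The `$A and $B. => $A.` rules are the ones that do use explicit variables. As a rough illustration of how such a rule could fire, here is a minimal Java sketch that turns a pattern into a regular expression with one capture group per `$`-variable, then substitutes the bindings into the conclusion. The class `RuleMatch` and its methods are hypothetical; the sketch does not infer variable parts by analogy the way the engine does, and it does not check that a repeated variable binds the same text twice.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical illustration of "$variable" rules; not the engine's actual matcher.
class RuleMatch {
    private static final Pattern VAR = Pattern.compile("\\$(\\w+)");

    // Matches a sentence against a pattern such as "$A and $B." and returns the bindings.
    static Optional<Map<String, String>> match(String pattern, String sentence) {
        List<String> names = new ArrayList<>();
        StringBuilder regex = new StringBuilder();
        Matcher v = VAR.matcher(pattern);
        int last = 0;
        while (v.find()) {
            regex.append(Pattern.quote(pattern.substring(last, v.start())));
            regex.append("(.+?)"); // each variable captures a non-empty span, as short as possible
            names.add(v.group(1));
            last = v.end();
        }
        regex.append(Pattern.quote(pattern.substring(last)));
        Matcher m = Pattern.compile(regex.toString()).matcher(sentence);
        if (!m.matches()) {
            return Optional.empty();
        }
        Map<String, String> bindings = new LinkedHashMap<>();
        for (int i = 0; i < names.size(); i++) {
            String value = m.group(i + 1);
            // The curly braces only delimit phrases for the parser, so drop them.
            if (value.startsWith("{") && value.endsWith("}")) {
                value = value.substring(1, value.length() - 1);
            }
            bindings.put(names.get(i), value);
        }
        return Optional.of(bindings);
    }

    // Replaces each $variable in the conclusion with its bound value.
    static String substitute(String template, Map<String, String> bindings) {
        Matcher v = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (v.find()) {
            String value = bindings.getOrDefault(v.group(1), v.group());
            v.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        v.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String fact = "{I ate pizza} and {the pizza had pepperoni on it}.";
        match("$A and $B.", fact).ifPresent(b -> {
            System.out.println(substitute("$A.", b)); // I ate pizza.
            System.out.println(substitute("$B.", b)); // the pizza had pepperoni on it.
        });
    }
}
```

The reluctant `(.+?)` groups mean the first " and " in the sentence is taken as the split point, which is exactly the kind of ambiguity the curly braces in the rule list are there to help with.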

That's it! Now we give the program the following new inputs:

I ate pizza with mom. I ate pizza with anchovis. I ate pizza with ducks. I ate pizza with my hands.

...and it comes up with these clarifications:

I ate pizza with anchovis. => I ate pizza and the pizza had anchovis on it.

I ate pizza with ducks. => I ate pizza and the pizza had ducks on it.

I ate pizza with mom. => I ate pizza and mom was with me.

I ate pizza with my hands. => I used my hands to eat pizza.

So nice! It's all correct. Sure, nobody would say "the pizza had ducks on it", but that is the most reasonable interpretation of the rather bizarre input sentence after the program eliminated the other two options (ducks as a tool or ducks as a person).

If you remove the line that says ducks are not persons, the program will correctly add the interpretation "I ate pizza and ducks were with me."
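The elimination step can be pictured with a much smaller Java sketch. It hard-codes the three readings of "I ate pizza with X" together with the plausibility check each one implies (X is edible, X is a person, X is a tool) and drops a reading when that check appears in the fail list. The class `WithReadings`, its methods, and both tables are assumptions made for illustration; the actual engine derives these steps from its rules by analogy rather than from a lookup table. Entity names are spelled as in the rule list above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical, hard-coded illustration of eliminating implausible readings;
// the real engine derives these steps from its rules instead of a table.
class WithReadings {
    static final class Reading {
        final String kind;     // e.g. "ingredient"
        final String template; // paraphrase, with X standing for the object of "with"
        final String category; // what X must be for this reading to make sense

        Reading(String kind, String template, String category) {
            this.kind = kind;
            this.template = template;
            this.category = category;
        }
    }

    static final List<Reading> READINGS = List.of(
        new Reading("ingredient", "I ate pizza and the pizza had X on it.", "edible"),
        new Reading("companion", "I ate pizza and X was with me.", "a person"),
        new Reading("instrument", "I used X to eat pizza.", "a tool"));

    // The "=> fail" lines from the rule list, as entity -> implausible categories.
    static final Map<String, Set<String>> FAILS = Map.of(
        "mom", Set.of("edible", "a tool"),
        "anchovis", Set.of("a tool", "a person"),
        "ducks", Set.of("a tool", "a person"),
        "my hands", Set.of("edible", "a person"));

    // Keeps only the readings whose required category is not ruled out for x.
    static List<Reading> readings(String x, Map<String, Set<String>> fails) {
        Set<String> ruledOut = fails.getOrDefault(x, Set.of());
        List<Reading> result = new ArrayList<>();
        for (Reading r : READINGS) {
            if (!ruledOut.contains(r.category)) {
                result.add(r);
            }
        }
        return result;
    }

    // Names of the surviving readings, handy for checking results.
    static List<String> kinds(String x, Map<String, Set<String>> fails) {
        List<String> out = new ArrayList<>();
        for (Reading r : readings(x, fails)) {
            out.add(r.kind);
        }
        return out;
    }

    public static void main(String[] args) {
        for (String x : List.of("anchovis", "ducks", "mom", "my hands")) {
            for (Reading r : readings(x, FAILS)) {
                System.out.println("I ate pizza with " + x + ". => " + r.template.replace("X", x));
            }
        }
    }
}
```

Dropping `"a person"` from the `ducks` entry mimics removing the "ducks are a person" line: the companion reading then survives alongside the ingredient one.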

Full program in my fancy language.

In addition to the results, the program also shows some lines of reasoning, e.g. failed lines:

Interpretation: I ate pizza and the pizza had mom on it.

=> I ate pizza.

=> the pizza had mom on it.

=> mom is edible.

=> fail

and successful lines:

Interpretation: I used my hands to eat pizza.

=> I used my hands.

=> my hands are a tool.
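Traces like these can be imitated with plain forward chaining: start from an interpretation, apply every rule whose premise equals a derived sentence, record each new conclusion, and stop as soon as a fail statement comes up. The Java sketch below is only an assumption about the general idea, not the engine's code; `ReasoningTrace` is a hypothetical name, its rules are exact-match strings with a single `$X` slot filled in beforehand, and its fail set covers only the "mom" lines.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical forward-chaining sketch that records a reasoning trace.
class ReasoningTrace {
    // Premise -> conclusions; "$X" stands for the object of "with".
    static final Map<String, List<String>> RULES = Map.of(
        "I ate pizza and the pizza had $X on it.", List.of("I ate pizza.", "the pizza had $X on it."),
        "the pizza had $X on it.", List.of("$X is edible."),
        "I used $X to eat pizza.", List.of("I used $X."),
        "I used $X.", List.of("$X is a tool."));

    // Implausible statements, taken from the "=> fail" lines.
    static final Set<String> FAILS = Set.of("mom is edible.", "mom is a tool.");

    // Derives consequences breadth-first, appending each new one to the trace; stops at "fail".
    static List<String> derive(String interpretation, String x) {
        List<String> trace = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Deque<String> queue = new ArrayDeque<>();
        queue.add(interpretation);
        seen.add(interpretation);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            if (FAILS.contains(current)) {
                trace.add("fail");
                return trace;
            }
            for (Map.Entry<String, List<String>> rule : RULES.entrySet()) {
                if (!rule.getKey().replace("$X", x).equals(current)) {
                    continue;
                }
                for (String conclusion : rule.getValue()) {
                    String derived = conclusion.replace("$X", x);
                    if (seen.add(derived)) {
                        trace.add(derived);
                        queue.add(derived);
                    }
                }
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        String reading = "I ate pizza and the pizza had mom on it.";
        System.out.println("Interpretation: " + reading);
        for (String step : derive(reading, "mom")) {
            System.out.println("=> " + step);
        }
    }
}
```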

Why did I make a specific program for this puzzle? Well, it's not really a special-purpose program. It's a general-purpose logic engine that handles problems up to a certain complexity, much like a child understands everything as long as it's not too complicated. The plan is to build increasingly capable logic engines until we can solve everything.

The program itself is under 100 lines (not counting rules and input), but of course it uses some powerful library functions.

New Module Preview

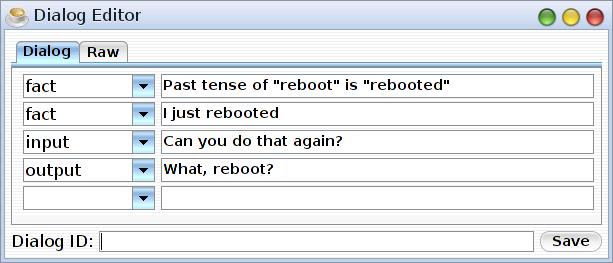

English to Rule

Input (plain English, not executable):

If something is heavy, it weighs many grams

This should be converted to this rule which is executable:

fact($something is heavy) => fact($something weighs many grams)

Actual program that does the conversion:

ai_ifToFactRule_2("If something is heavy, it weighs many grams", litciset("it") /* pronouns */, litciset("something") /* nouns */)

As you can see, the only customization the function needs is a list of pronouns and a list of nouns.
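For readers without JavaX at hand, here is a rough Java sketch of what such a conversion could involve, assuming the simplest sentence shape "If <condition>, <consequence>": the first listed noun in the condition becomes a `$`-variable, and every listed noun or pronoun in either half is replaced by that variable before both halves are wrapped in `fact(...)`. The class `IfToRule`, the single-variable assumption, and the space-separated tokenization (no punctuation handling) are mine; this is not how `ai_ifToFactRule_2` is actually implemented.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical re-creation of the conversion idea; not the real ai_ifToFactRule_2.
class IfToRule {
    // "If <condition>, <consequence>", split at the first comma.
    private static final Pattern IF_THEN = Pattern.compile("(?i)if\\s+(.+?),\\s*(.+)");

    // Pronouns and nouns are expected in lower case.
    static String ifToFactRule(String sentence, Set<String> pronouns, Set<String> nouns) {
        Matcher m = IF_THEN.matcher(sentence.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not an if-sentence: " + sentence);
        }
        String condition = m.group(1);
        String consequence = m.group(2);

        // Assumption: the first listed noun in the condition introduces the only variable.
        String variable = null;
        for (String word : condition.split(" ")) {
            if (nouns.contains(word.toLowerCase())) {
                variable = "$" + word.toLowerCase();
                break;
            }
        }
        if (variable == null) {
            throw new IllegalArgumentException("No listed noun in: " + condition);
        }

        // Listed nouns and pronouns anywhere in the sentence refer to that variable.
        Set<String> references = new HashSet<>(nouns);
        references.addAll(pronouns);
        return "fact(" + replaceWords(condition, references, variable) + ") => fact("
            + replaceWords(consequence, references, variable) + ")";
    }

    // Replaces every space-separated word found in `words` (case-insensitively) by `variable`.
    private static String replaceWords(String text, Set<String> words, String variable) {
        StringBuilder out = new StringBuilder();
        for (String token : text.split(" ")) {
            if (out.length() > 0) {
                out.append(' ');
            }
            out.append(words.contains(token.toLowerCase()) ? variable : token);
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(ifToFactRule("If something is heavy, it weighs many grams",
            Set.of("it"), Set.of("something")));
        // fact($something is heavy) => fact($something weighs many grams)
    }
}
```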

Finally, we can test the new rule.

ai_applyFactToFactRules("fact($something is heavy) => fact($something weighs many grams)", "John is heavy")

which yields:

[John weighs many grams]
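Applying such a rule amounts to matching the input against the left-hand `fact(...)` pattern and substituting the resulting bindings into the right-hand side. Here is a minimal Java sketch under that assumption; the class `ApplyFactRule` is hypothetical, each variable is assumed to match one or more characters, and repeated variables are not checked for consistency. It returns a list (empty when the rule does not fire), loosely mirroring the bracketed output above; it is not the real `ai_applyFactToFactRules`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical sketch of applying one "fact(A) => fact(B)" rule to one input fact.
class ApplyFactRule {
    private static final Pattern RULE = Pattern.compile("fact\\((.*)\\)\\s*=>\\s*fact\\((.*)\\)");
    private static final Pattern VAR = Pattern.compile("\\$\\w+");

    // Returns the derived facts: one if the left-hand side matches, none otherwise.
    static List<String> apply(String rule, String fact) {
        Matcher r = RULE.matcher(rule.trim());
        if (!r.matches()) {
            throw new IllegalArgumentException("Not a fact rule: " + rule);
        }
        String lhs = r.group(1);
        String rhs = r.group(2);

        // Turn the left-hand side into a regex: literal text is quoted,
        // each $variable becomes a capture group.
        List<String> vars = new ArrayList<>();
        StringBuilder regex = new StringBuilder();
        Matcher v = VAR.matcher(lhs);
        int last = 0;
        while (v.find()) {
            regex.append(Pattern.quote(lhs.substring(last, v.start()))).append("(.+?)");
            vars.add(v.group());
            last = v.end();
        }
        regex.append(Pattern.quote(lhs.substring(last)));

        List<String> results = new ArrayList<>();
        Matcher m = Pattern.compile(regex.toString()).matcher(fact.trim());
        if (!m.matches()) {
            return results;
        }
        Map<String, String> bindings = new HashMap<>();
        for (int i = 0; i < vars.size(); i++) {
            bindings.put(vars.get(i), m.group(i + 1));
        }

        // Substitute the bindings into the right-hand side.
        Matcher out = VAR.matcher(rhs);
        StringBuilder derived = new StringBuilder();
        while (out.find()) {
            String value = bindings.getOrDefault(out.group(), out.group());
            out.appendReplacement(derived, Matcher.quoteReplacement(value));
        }
        out.appendTail(derived);
        results.add(derived.toString());
        return results;
    }

    public static void main(String[] args) {
        System.out.println(apply(
            "fact($something is heavy) => fact($something weighs many grams)",
            "John is heavy")); // [John weighs many grams]
    }
}
```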

Switching back to Chrome

YouTube is unusable on Firefox. It's probably deliberate. That's what you get from a monopoly...

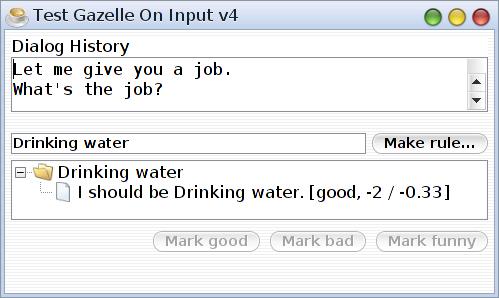

Machine Asks Questions

Artificial philosopher in the making.

Collaboration with AIRIS?

Here's an interesting project for reinforcement learning which, just possibly, may be a good addition to my software infrastructure.

There is a language gap to bridge (Python/JavaX), but that's what we went to university for, didn't we?

The Best Playlist

Just thought I'd share this. A literal remix heaven by The Reflex.

JavaX vs Smalltalk [the programming language]

You see, I do know a bit of history. I was part of the Smalltalk movement 'cause yeah, that thing has tremendous charm. Miles ahead of everything.

In fact, it just dawned on me that JavaX offers a lot of the fabulous Smalltalk experience: an integrated virtual machine where source code, runnable code, GUIs and orthogonally persistent data all intersect.

So where did Smalltalk fail? And no, JavaX does not suffer from the same problem.

Tabs++ (or: avoiding the complexity problem)

Why a Java OS? The "Kilroy" Demo

Because a next-generation OS is just smarter.

Frantic action

I want to finish the big project now: the talking operating system.

I should be a CEO

I'm the right man for the job. I understand technology, people—and I understand the madness that money represents. Also I don't have to do this.

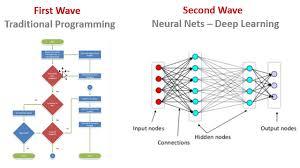

The "Third Wave of AI"

In the generally quite rotten IT industry, there is now talk of a "third wave of AI". And, interestingly, this time they float pretty much the ideas I have been pushing for the last 5 years.

Although, of course, it's fair to say I still have a lot of tricks up my sleeve that they haven't even conceived of yet.

So is now the time for change?

![BotCompany [logo by sadietwiqs]](https://botcompany.de/images/1102944)